42 confident learning estimating uncertainty in dataset labels

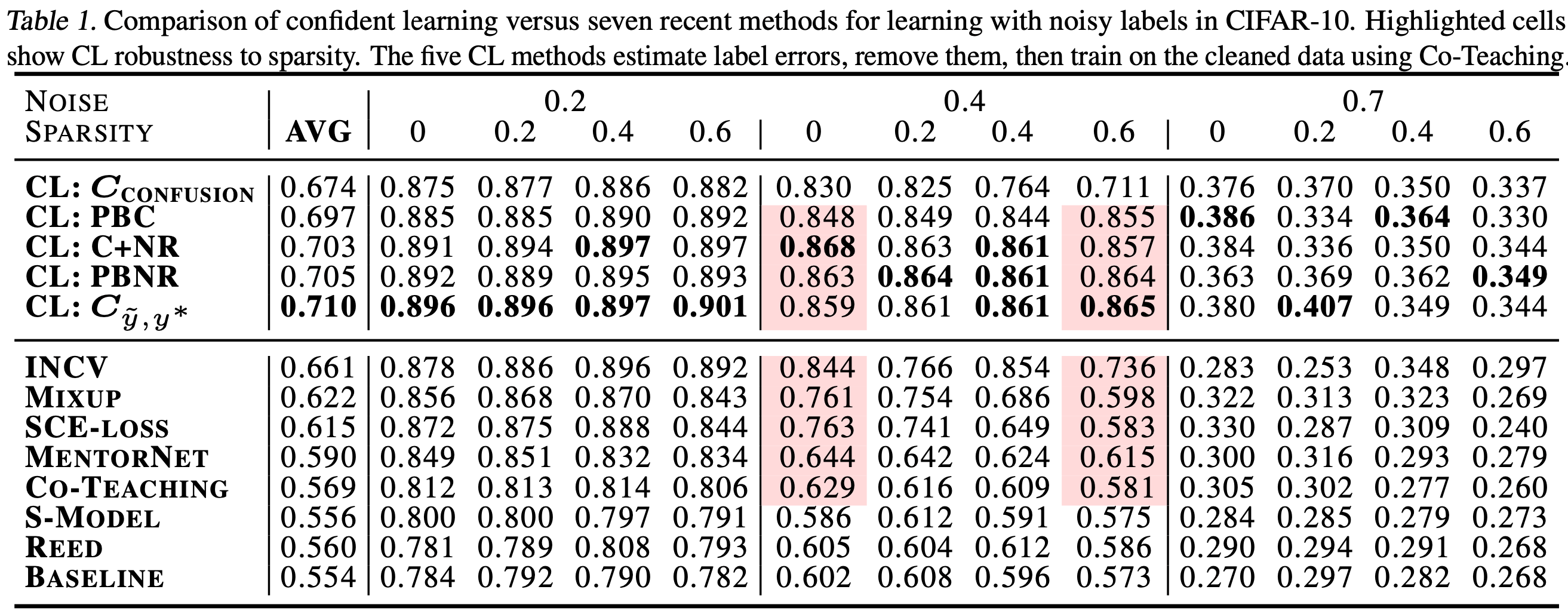

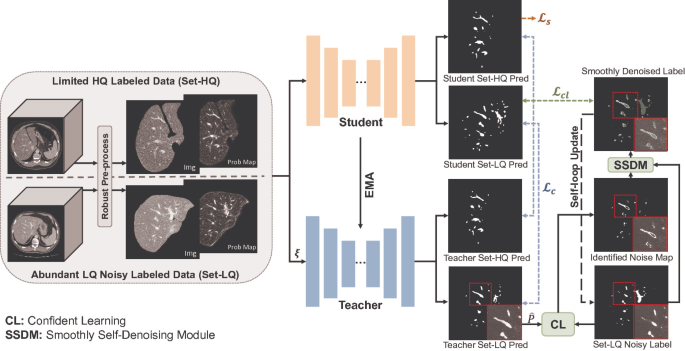

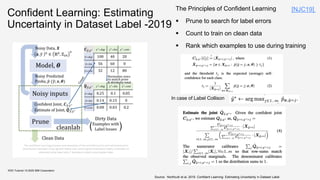

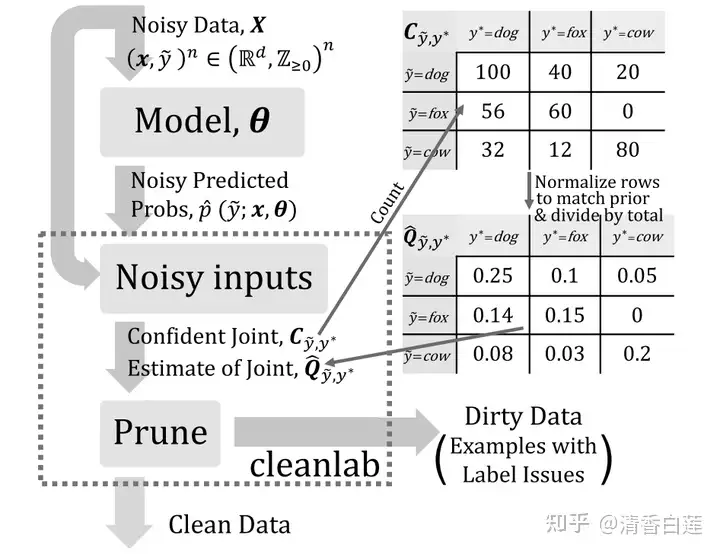

Confident Learning: Estimating Uncertainty in Dataset Labels. Learning exists in the context of data, yet notions of $\textit{confidence}$ typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach that focuses instead on label quality by characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

Confident Learning -Is that label correct?- - 学習する天然ニューラルネット. What is this? The paper "Confident Learning: Estimating Uncertainty in Dataset Labels", submitted to ICML 2020, was very interesting, so I am publishing my summary of it. Paper: [1911.00068] Confident Learning: Estimating Uncertainty in Dataset Labels. Quick overview: datasets contain examples whose labels are wrong (noisy labels). Such samples are detected ...


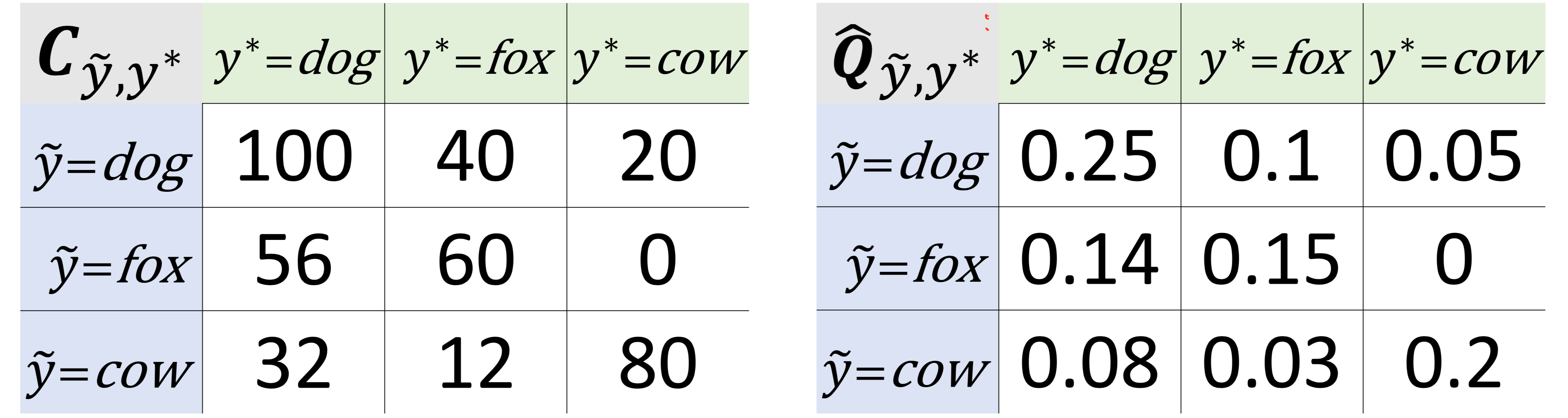

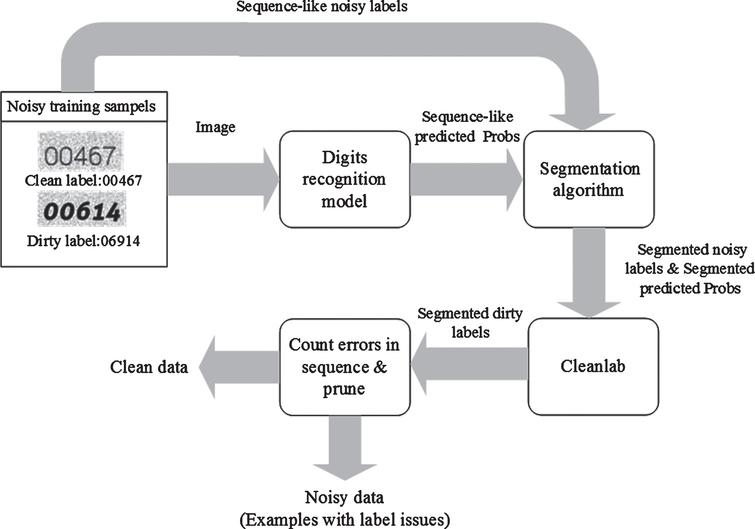

Confident Learning: Estimating Uncertainty in Dataset Labels (PDF, ResearchGate). Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to...

Confident Learning: Estimating Uncertainty in Dataset Labels (ReadkonG). 3. CL Methods. Confident learning (CL) estimates the joint distribution between the (noisy) observed labels and the (true) latent labels. CL requires two inputs: (1) the out-of-sample predicted probabilities $\hat{P}_{k,i}$ and (2) the vector of noisy labels $\tilde{y}_k$. The two inputs are linked via index $k$ for all $x_k \in X$.

An Introduction to Confident Learning: Finding and Learning with Label Errors in Datasets. Comment from Curtis Northcutt (Mod) to Justin Stuck, 3 years ago: Hi, thanks for the questions. Yes, multi-label is supported, but is alpha (use at your own risk). You can set `multi_label=True` in the `get_noise_indices()` function and other functions.
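The CL Methods excerpt above names two inputs, out-of-sample predicted probabilities and a vector of noisy labels, and the abstract mentions counting with probabilistic thresholds. The following is a minimal sketch of how such a count could look, not the paper's or any library's implementation. It assumes the per-class threshold is the mean predicted probability of a class over the examples that carry that label (a choice the excerpts here do not spell out), and that every class appears at least once in the labels. The function name `confident_joint_counts` and the toy data are hypothetical.

```python
import numpy as np


def confident_joint_counts(pred_probs, noisy_labels):
    """Count examples by (noisy label, likely true label) using per-class thresholds.

    pred_probs:   (n_examples, n_classes) out-of-sample predicted probabilities
    noisy_labels: (n_examples,) observed, possibly wrong, integer labels
    """
    pred_probs = np.asarray(pred_probs, dtype=float)
    noisy_labels = np.asarray(noisy_labels)
    n_classes = pred_probs.shape[1]

    # Assumed threshold for class j: average probability of class j
    # among the examples whose observed label is j.
    thresholds = np.array(
        [pred_probs[noisy_labels == j, j].mean() for j in range(n_classes)]
    )

    counts = np.zeros((n_classes, n_classes), dtype=int)
    for probs, given in zip(pred_probs, noisy_labels):
        above = np.flatnonzero(probs >= thresholds)
        if above.size == 0:
            continue  # no class clears its threshold, so the example is not counted
        # Among classes that clear their threshold, take the most probable one.
        likely = above[np.argmax(probs[above])]
        counts[given, likely] += 1
    return counts, thresholds


# Toy data (made up for illustration): five examples, two classes.
toy_probs = [[0.9, 0.1], [0.8, 0.2], [0.05, 0.95], [0.2, 0.8], [0.1, 0.9]]
toy_labels = [0, 0, 0, 1, 1]

counts, thresholds = confident_joint_counts(toy_probs, toy_labels)
joint_estimate = counts / counts.sum()  # crude normalization, a simplification
print(counts)
print(joint_estimate)
```

Off-diagonal entries of `counts` correspond to examples whose observed label disagrees with the confidently predicted class, which makes them candidates for label errors. Dividing by the total is only a rough stand-in for estimating the joint distribution between noisy and true labels.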

Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence. Whereas numerous studies have developed ...

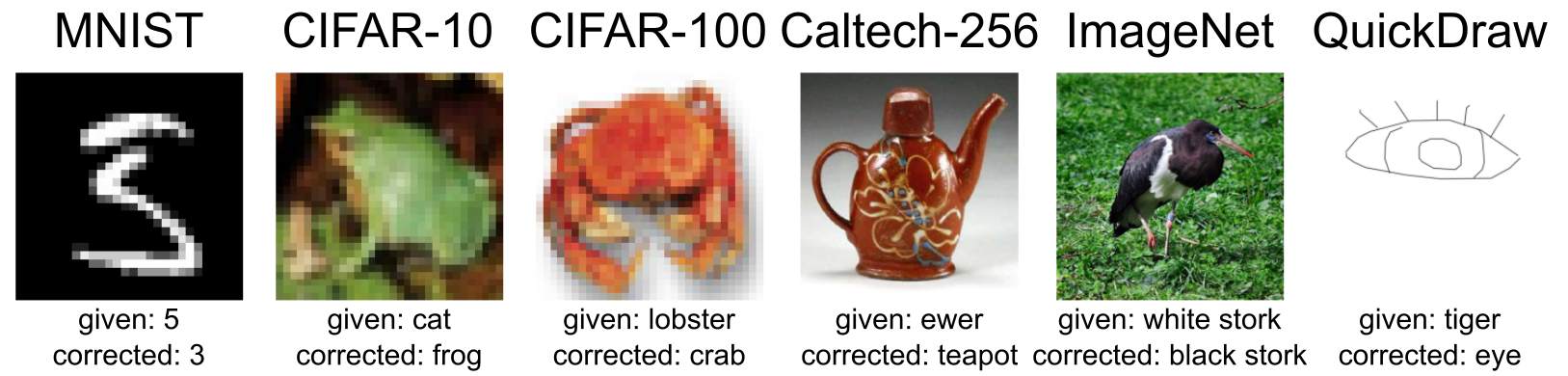

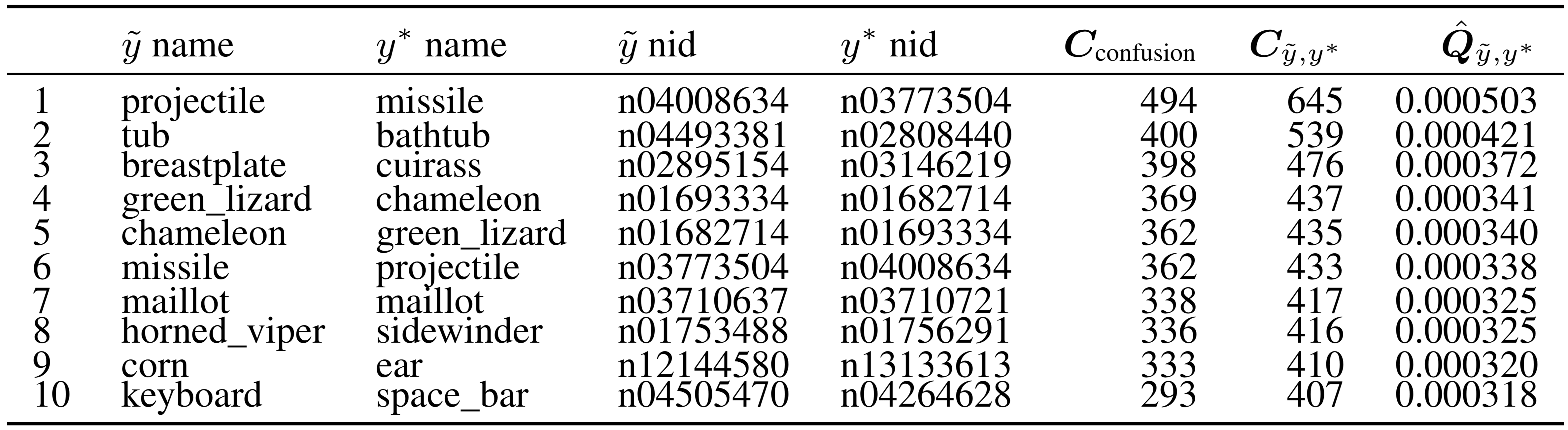

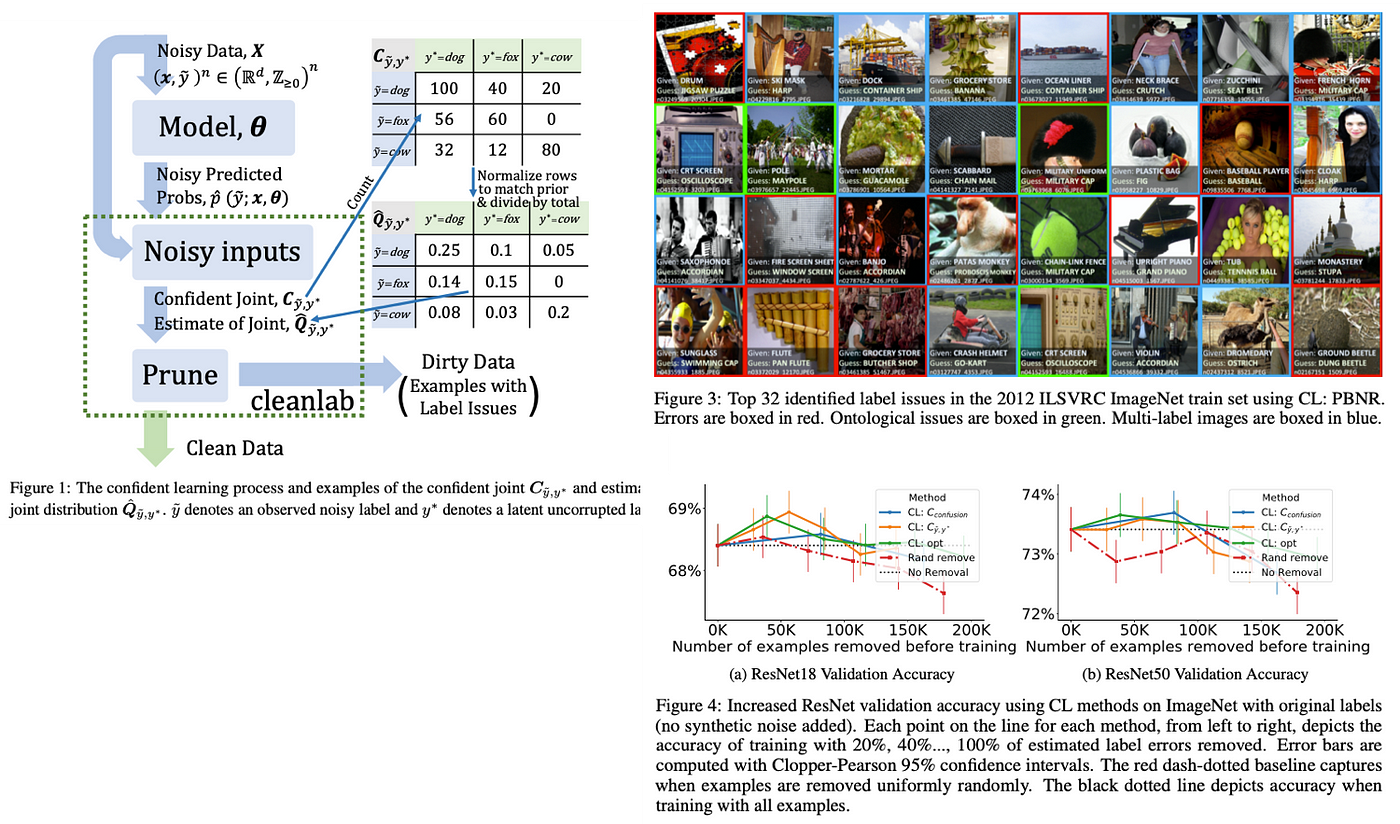

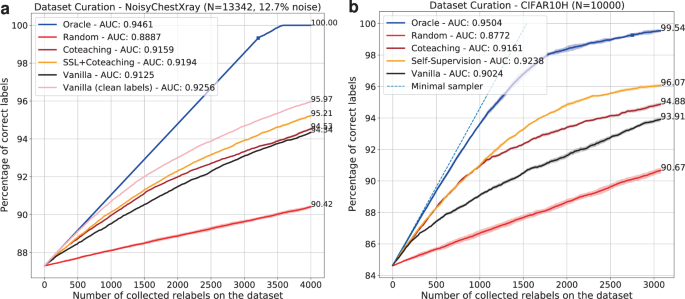

Figures from "Confident Learning: Estimating Uncertainty in Dataset Labels":

Figure 4: Increased ResNet validation accuracy using CL methods on ImageNet with original labels (no synthetic noise added). Each point on the line for each method, from left to right, depicts the accuracy of training with 20%, 40%, ..., 100% of estimated label errors removed.

Figure 3: Top 32 identified label issues in the 2012 ILSVRC ImageNet train set using CL: PBNR. Errors are boxed in red. Ontological issues are boxed in green. Multi-label images are boxed in blue.


![[PDF] Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/26-Figure11-1.png)

![[R] Announcing Confident Learning: Finding and Learning with ...](https://external-preview.redd.it/p3HQXQzdkmyXyJ89enL6PkgmSdstFY5z1QkzOzRNUaU.jpg?auto=webp&s=01b29ed2d2e90d072e1fc7295da2c1cb3797f686)

![Active Learning in Machine Learning [Guide & Examples]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/633a98dcd9b9793e1eebdfb6_HERO_Active%20Learning%20.png)

![[R] Announcing Confident Learning: Finding and Learning with ...](https://external-preview.redd.it/JyNe3XlRAW8dLTeUjHMVD_W3Exh6KXJv0Znqhd6aE7E.jpg?auto=webp&s=c16c72d9b65f4dee5f0859b36de39811ba27404d)

![[PDF] Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/2-Figure1-1.png)
